New study finds music can be reconstructed from brain recordings

Researchers have demonstrated that a recognizable version of a song can be reconstructed from the recorded neural activity of patients who listened to it, according to a new study.

The finding is significant because it suggests that people with neurodegenerative diseases and similar conditions might someday be able to use their own voices again, a news release on the research explained.

Past research has shown that computer modeling can be used to decode and reconstruct human speech, but scientists had not yet managed to do the same with music.

According to the news release, what was missing was a predictive model that could identify pitch, melody, harmony, and rhythm, as well as the areas of the brain involved in processing them.

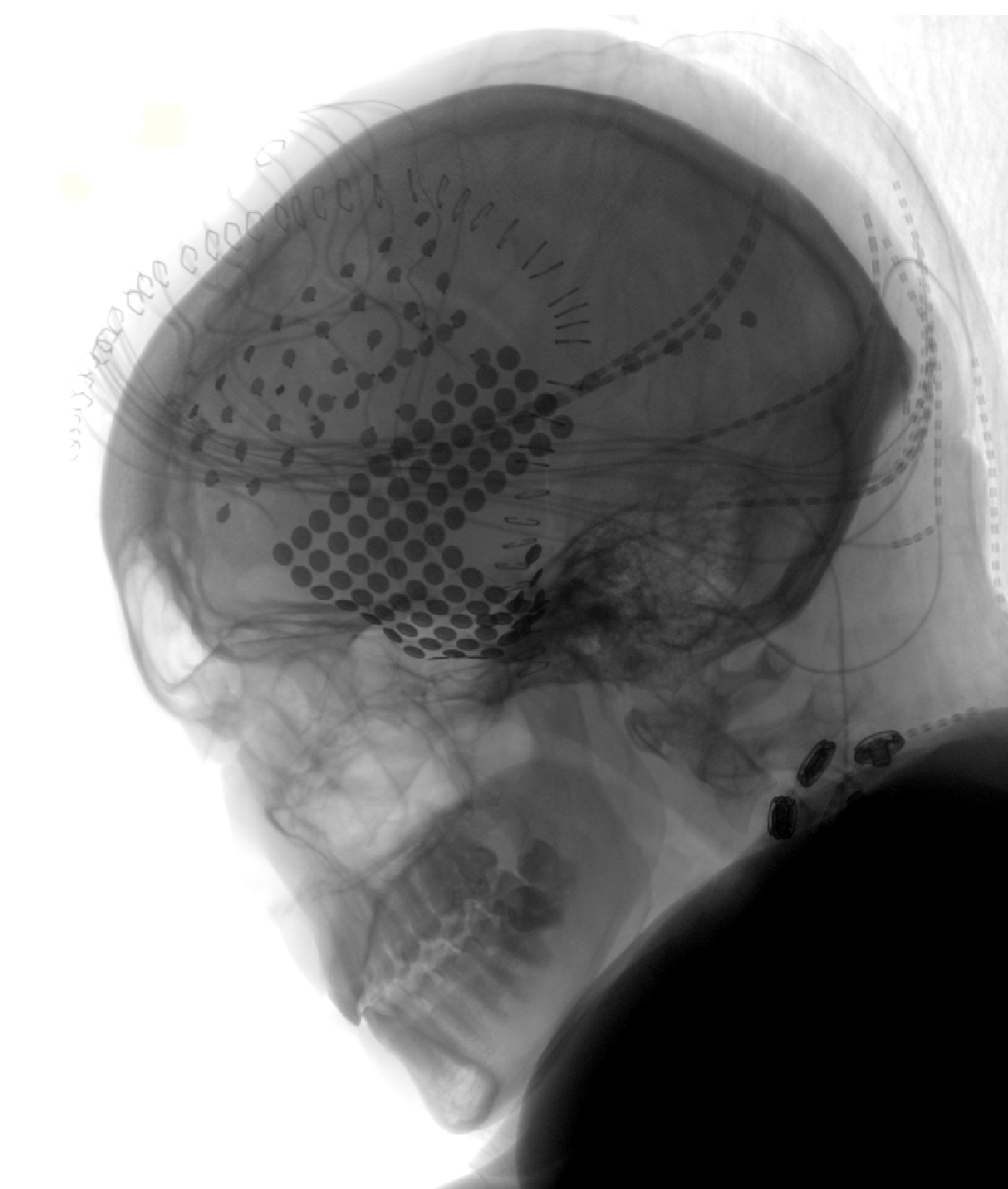

However, scientists at the University of California, Berkeley, were able to develop such a model by decoding the brain activity of 29 patients who had been outfitted with a total of 2,668 electrodes.

Photo Credit: Wiki Commons

The patients were listening to Pink Floyd’s “Another Brick in the Wall, Part 1” when the researchers recorded their brain activity.

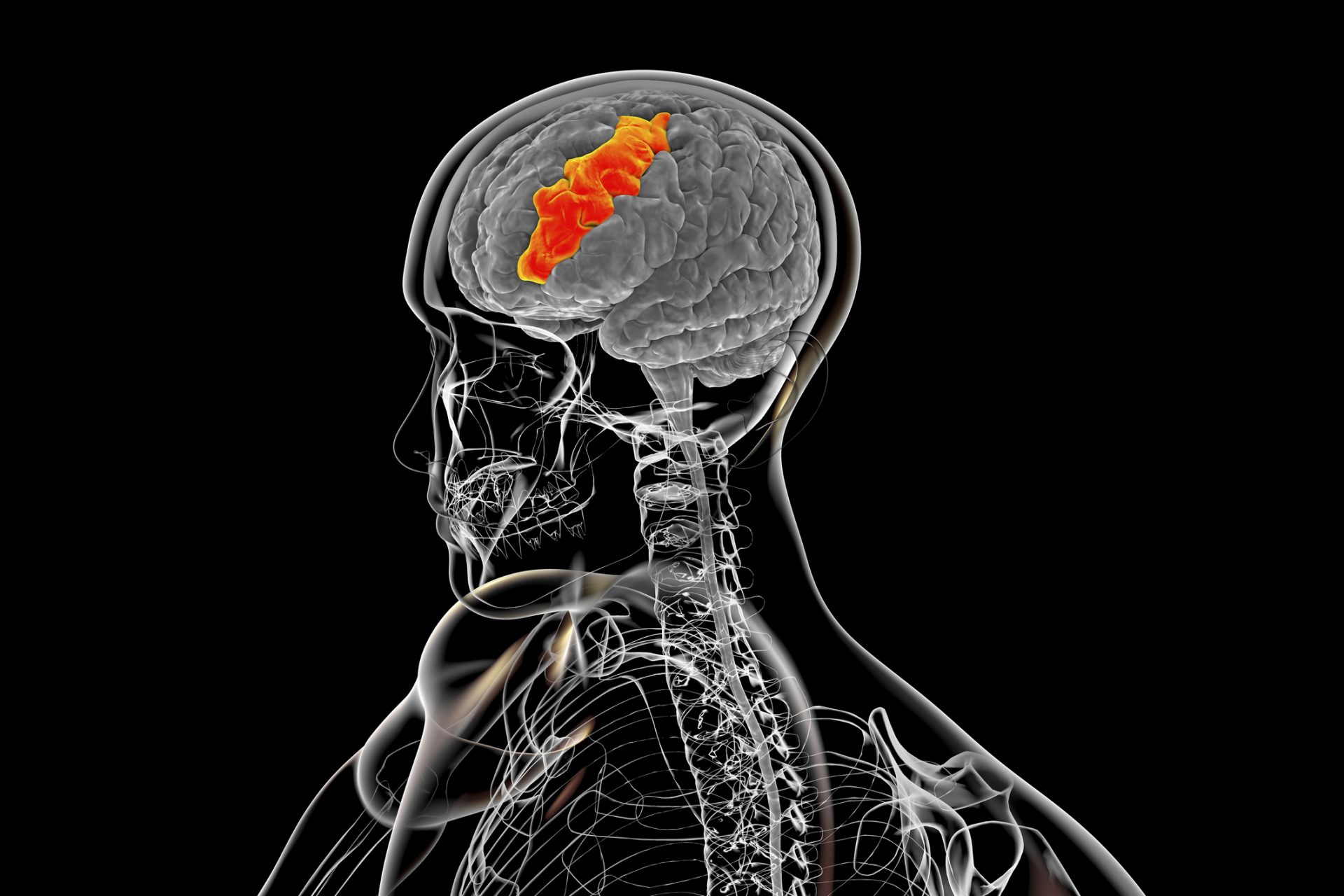

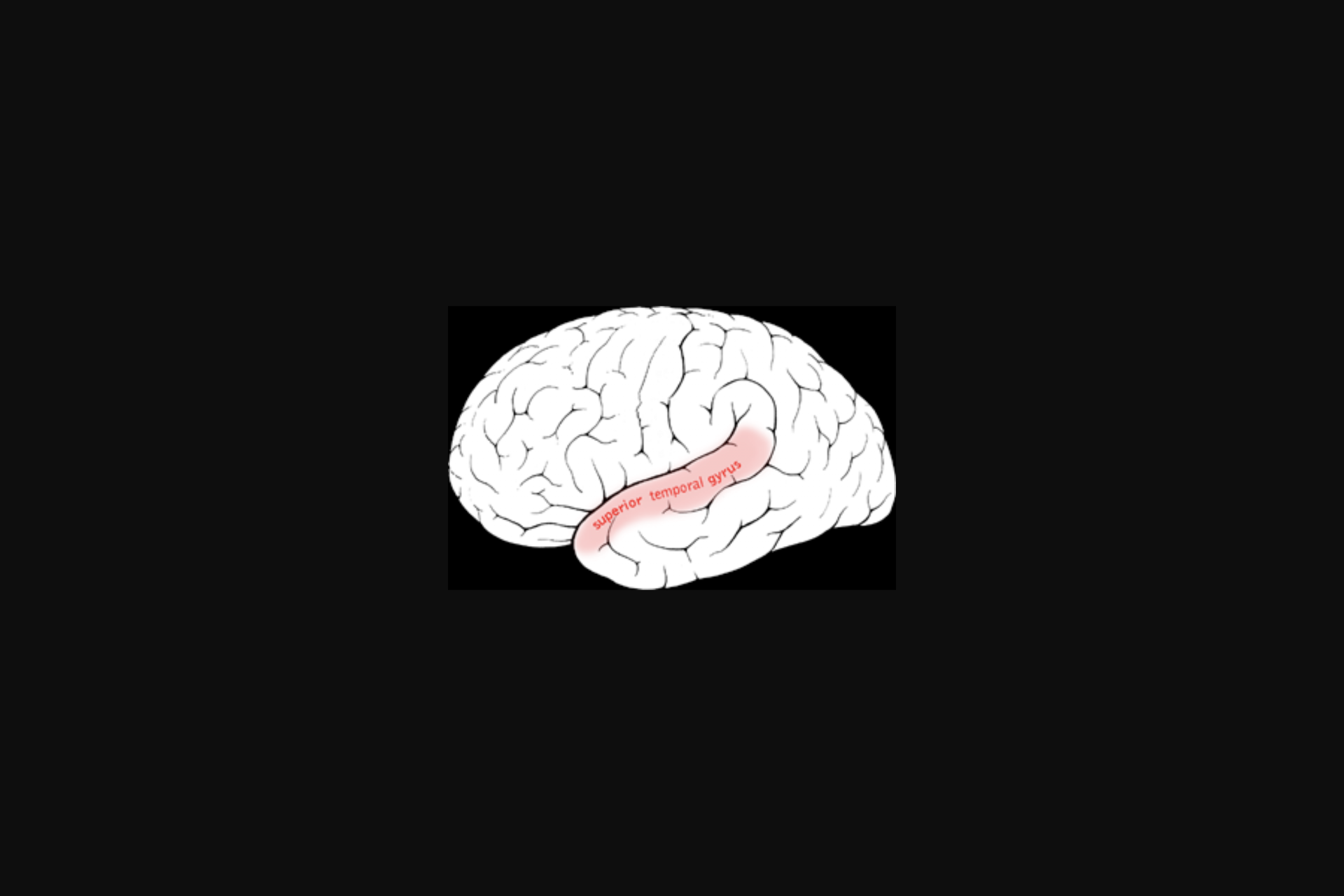

From the recordings, the researchers were able to identify three regions of the brain involved in processing music: the Superior Temporal Gyrus (STG), the Sensory-Motor Cortex (SMC), and the Inferior Frontal Gyrus (IFG).

Analysis of the data showed that a distinct region in the Superior Temporal Gyrus was responsible for rhythm. The news release noted that in the case of “Another Brick in the Wall,” this area responded to the song's guitar rhythm.

The researchers also found that removing the electrodes in certain spots from the analysis degraded the quality of the sound they could recreate, which is how they determined which areas of the brain play a crucial role in perceiving music.

Photo Credit: Peter Brunner of Albany Medical College in New York and Washington University, included in the University of California, Berkeley press release

"It's a wonderful result," University of California, Berkeley psychology professor Robert Knight said in a separate press release. Knight, a co-author of the study, noted that the findings could have a big impact on the quality of life of some patients.

“As this whole field of brain-machine interfaces progresses, this gives you a way to add musicality to future brain implants for people who need it,” Knight explained.

Knight went on to say that people with amyotrophic lateral sclerosis (ALS) or other disabling neurological diseases could someday have the musicality of their speech restored thanks to the findings of this research.

“It gives you an ability to decode not only the linguistic content, but some of the prosodic content of speech, some of the affect. I think that's what we've really begun to crack the code on,” Knight added.

However, according to Knight, current technology cannot yet reproduce what the brain is processing quickly enough: it can take as long as 20 seconds to reconstruct what is happening in the brain, which is too slow to be of practical use for people today.

“Noninvasive techniques are just not accurate enough today,” explained study co-author Ludovic Bellier, who added that recording from electrodes placed outside the skull would be more helpful for patients.

“Let's hope, for patients, that in the future we could, from just electrodes placed outside on the skull, read activity from deeper regions of the brain with a good signal quality. But we are far from there,” Bellier added.
